If you have been following my publication trail with Minding the Campus, you are likely familiar with my frequent discussions of scientific ethics. I have commented on research misconduct, peer review fraud, and the reproducibility crisis, examining how careless behavior and intentional fabrication in the scientific world carry profound implications for the future citations and research built on their illusory foundations. I have also commented on misconduct in the medical field and its grim consequences for the victims it calls patients.

In today’s article, though, I would like to introduce a fun new twist into the scientific ethics story: artificial intelligence (AI).

At this point, AI is most certainly not a new topic of conversation in society at large. Nor is it a new point of debate in the world of academia, or even on Minding the Campus. But when one considers the implications of AI in journal article development and peer review, the waters get extremely muddy.

On the one hand, reports have surfaced praising AI’s efficacy in detecting fraud where human eyes cannot. This makes perfect sense. AI can scan millions of previously published articles to detect plagiarism and uncited work. It can also scan images or text for nonsensical portions of an article, pointing to where fabrication may have occurred.

In fact, the publisher Frontiers is already using AI tools. In a news article on the Frontiers website about the company’s Artificial Intelligence Review Assistant (AIRA), Research Integrity Specialist Andrew Gardyne notes the following:

“AIRA’s state-of-the-art algorithms allow quick detection of potentially problematic submissions and inform our further actions. For example, it can quickly bring to our attention when a submission is being authored, edited, or reviewed by someone who has previously been proven to have engaged in scientific misconduct e.g., by fabricating data or extensively plagiarizing a submission. It can also quickly identify when authors, reviewers and editors share affiliations, even if they have denied this. This spares the need for time-consuming manual checks and allows potentially problematic situations to be identified and actioned promptly.”

In a similar fashion, Springer Nature now uses two AI tools, Geppetto and SnapShot, to detect AI-generated text and to check image soundness, respectively. Much as the Turnitin platform in universities flags student papers suspected of plagiarism for further review by a professor, these tools flag journal articles for further review by Springer Nature staff.

The use of AI by journal platforms such as Frontiers and Springer Nature suggests a promising role for AI as an assistant in the journal publication and peer review process.

However, we must always consider the other side of the coin. After all, when a good thing exists, there is always a way to pervert it.

Of great concern in the university education system is the increasing prevalence of students using AI to generate fraudulent essays and other written assignments for class submission. However, concerns surrounding this unethical practice are not isolated to students. AI can very easily be used to generate fraudulent articles for submission to journals.

Even more disturbing, these articles can be so skillfully crafted that they are often incredibly difficult to identify and could, therefore, escape detection by a peer review board. Thus, while AI steps in as an excellent assistant in detecting publication fraud, it simultaneously serves as a fantastic aid in producing fraudulent journal articles.

For example, in a very troubling 2023 publication, Májovský et al. generated a fabricated neurosurgery article using ChatGPT with a GPT-3 language model. They then submitted the fabricated article to experts in neurosurgery, psychology, and statistics for review and compared it with authentic articles of similar structure and content.

The results were alarming.

The authors state the following in their publication’s discussion section: “we have demonstrated that AI (ChatGPT) can create a highly convincing medical article that is completely fabricated with limited effort from a human user in a matter of hours.” Furthermore, the authors note that any errors found in the article were “minor inaccuracies,” “minor study design flaws,” and “indistinguishable from those that a human could make.”

This study highlights the imminent danger of AI infiltrating the journal publication world. And, to be honest, the word “imminent” is probably no longer accurate. In a March 2024 article on her Substack, Art Fish Intelligence, Yennie Jun reported that over 100 AI-generated peer-reviewed journal articles from various disciplines, dated from 2022, had already been discovered on Google Scholar by searching for the phrase “as of my last knowledge update,” a common AI chatbot response.

Another point of consideration in the conversation about AI and journal articles is the use of AI for small portions of article development rather than for writing a complete manuscript. This use currently sits in the greyest area of all AI applications in the journal publication process. Although journal platforms prohibit listing AI as a co-author, they do allow the use of AI for editing text, generating some tables and images, and analyzing data, as long as this use is clearly disclosed by the authors. This authorization, however, has already led to significant problems.

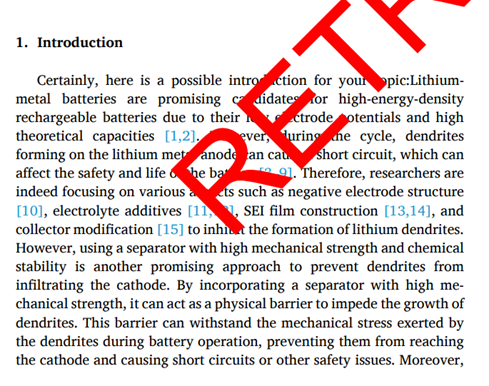

Molly Coddington reported a case in Technology Networks regarding an incident faced by the journal platform Elsevier concerning a since-retracted article on the topic of lithium anode batteries. This article was found to contain the phrase “Certainly, here is a possible introduction for your topic” as the opener to the very first paragraph. See the phrase in the following image beneath the bold red “RETRACTED” text overlay:

As Ms. Coddington notes, this is a common opening line that AI chatbots such as ChatGPT produce in response to a request or question, much like the phrase in Ms. Jun’s report. What is concerning in this case is not necessarily the blatant use of AI, although its conspicuousness is eyebrow-raising given that the article survived peer review and made it to publication, but rather the authors’ failure to declare its use. One naturally wonders whether the oversight was mere carelessness or intentional concealment.

There is a great deal to consider when introducing AI into manuscript publication and peer review. While its application to the screening process as a supplement to human eyes is clearly beneficial, it also sits on the opposite side of publication, poised to serve as a lethal weapon against research integrity.

And while one may think that quick detection and removal undo the damage caused by publishing these articles, one never knows what domino effect they have already set off in their fields. Furthermore, how many of these carefully crafted AI articles are currently hiding in plain sight, wolves in sheep’s clothing that can ultimately cause significant harm by serving as cited works or as foundations for future research?

This leads us to the unanswerable question. When it comes to research, is AI our friend or foe?

Explore more from Hannah Hutchins on Muck Rack.

Image: “Smartphone with ChatGPT on keyboard” by Jernej Furman from Slovenia on Wikimedia Commons